Training of an AFPN

Before training an Adaptive Fuzzy Petri Nets, system inputs and outputs must be determined. Suppose that a system is described by weighted fuzzy production rule. Now, all the right hands of the rules are explained as the system outputs. If sufficient training data is available, the parameters can be adjusted well sufficient. As per to various types of system inputs, an Adaptive Fuzzy Petri Nets is described into different types of sub-structures as represented in following figures (a) and (b) and (c). Hence a learning of an entire net can be decomposed into some simpler learning processes referring to these small subnets. Such fact greatly decreased the complexity of the learning algorithm.

Figure (c): Adaptable Parts II(b)

Given weighted fuzzy production rule, this is assumed the threshold of each its antecedent propositions and its certainty factors are identified but the input weights WI are not certain. In the conditions these weights are to be learned, where there are enabled transitions. On the other hand, for the type 1 fuzzy production rules, the input weights are 1, hence only type 2 and type 3 fuzzy production rules weights required to be learned. Moreover if we have a set of training data, via comparing the weights of the input arcs, this is simple to determine that transition is the dominating one at this place. Therefore training can be implemented whether enough data are available.

Example .2

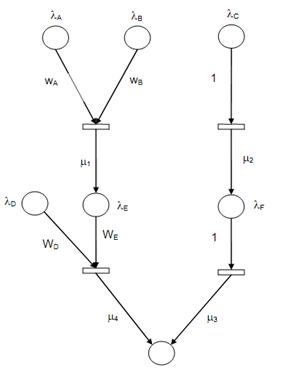

{A, B, C, D, E, F, G} are the connected propositions of a knowledge-based system Γ. Among them exists the following rule as:

R1: If A and B Then E, λA, λB, wA, wB, (CF = μ1)

R2: If C Then F, λC (CF = μ2)

R3: If F Then G, λF (CF = μ3)

R4: If D and E Then G, λD, λE, wD, wE (CF = μ4)

Based upon above translation principle such system is mapped into an Adaptive Fuzzy Petri Nets as shown in following figure.

Figure: A Representation of Knowledge Based System

In following real data is specified for this system as:

λA = 0.5, λB = 0.8, λC = 0.3, λD = 0.8, λE = 0.1, λF = 0.4 and

μ1 = 0.8, μ2 = 0.9, μ3 = 0.6, μ4 = 0.7 .

As the required weights are not known, neural network technique is employed to learn these weights from actual data. While the set of inputs {α ( pA ), α ( pB ), α ( pC ), α ( pD )} are known, a human expert can give the consequent output ψ = {α ( pE ), α ( pF ), α ( pG )} .