Linear Regression:

The process of obtaining the best fit is frequently called "regression". Linear regression refers to fitting a straight line to data.

Linear approximations are often satisfactory in a wide variety of engineering applications.

Suppose a straight line is chosen for curve fitting, as shown below:

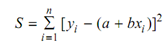

$f(x) = a + bx$, where $a$ and $b$ are the coefficients to be determined. For the "best fit", the sum $S$ to be minimized is

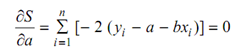
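As a minimal sketch, assuming the sum in the figure is the usual sum of squared residuals $S = \sum (y_i - a - b x_i)^2$ (which is consistent with the simplified conditions given further on), $S$ can be evaluated for any trial pair $(a, b)$. The function name `sum_of_squared_residuals` and the three data points are hypothetical, chosen only for illustration.

```python
# Hypothetical helper: evaluates the least-squares criterion S for a trial line.
# Assumes S = sum over i of (y_i - a - b*x_i)^2.
def sum_of_squared_residuals(x, y, a, b):
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    # Residual = observed y_i minus the value predicted by f(x_i) = a + b*x_i
    return sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))


# Illustrative data (not from the original): (0, 1), (1, 3), (2, 4)
print(sum_of_squared_residuals([0, 1, 2], [1, 3, 4], a=1.0, b=2.0))  # → 1.0
```

Different $(a, b)$ pairs give different values of $S$; the best-fit line is the pair that makes $S$ as small as possible.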

The minimum occurs when

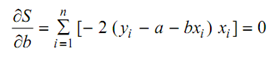

and

$$\frac{\partial S}{\partial b} = -2\sum (y_i - a - b x_i)\,x_i = 0$$

These can be simplified and expressed as

$$\sum y_i - \sum a - \sum b x_i = 0 \quad \text{and} \quad \sum y_i x_i - \sum a x_i - \sum b x_i^2 = 0$$

i.e.,

$$na + b\sum x_i = \sum y_i \quad \text{and} \quad a\sum x_i + b\sum x_i^2 = \sum x_i y_i$$
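The elimination step is not spelled out in the text, so it is sketched here. Multiplying the first equation by $\sum x_i$ and the second by $n$, then subtracting, removes $a$; substituting $b$ back into the first equation gives $a$. Here $x_{avg}$ (the mean of the $x_i$) is a symbol introduced for this step:

$$b = \frac{n\sum x_i y_i - \sum x_i \sum y_i}{n\sum x_i^2 - \left(\sum x_i\right)^2}, \qquad a = \frac{\sum y_i - b\sum x_i}{n} = y_{avg} - b\,x_{avg}$$

The denominator of $b$ is zero only when all $x_i$ are equal, in which case no unique straight line exists.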

These two simultaneous equations might be solved to attained the coefficients a and

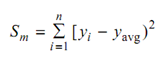

b. The resulting equation f (x) = a + bx then provides the best fit straight line to the given data. The spread of data before regression applied can be n,
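A minimal sketch of solving the two simultaneous equations in closed form (by elimination), in plain Python. The function name `fit_line` and the example data are hypothetical; for larger problems a library routine would normally be used instead.

```python
# Hypothetical helper: least-squares straight line f(x) = a + b*x.
# Solves the normal equations
#   n*a + b*sum(x)      = sum(y)
#   a*sum(x) + b*sum(x^2) = sum(x*y)
def fit_line(x, y):
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    sx = sum(x)
    sy = sum(y)
    sxx = sum(xi * xi for xi in x)
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    det = n * sxx - sx * sx  # zero when all x_i are equal
    if det == 0:
        raise ValueError("x values must not all be equal")
    b = (n * sxy - sx * sy) / det  # slope, from eliminating a
    a = (sy - b * sx) / n          # intercept, back-substituted into the first equation
    return a, b


# Illustrative data (not from the original): (0, 1), (1, 3), (2, 4)
a, b = fit_line([0, 1, 2], [1, 3, 4])
print(a, b)  # a ≈ 1.1667, b = 1.5
```

Data that already lie on a line, such as $(1, 3), (2, 5), (3, 7)$, return that line exactly ($a = 1$, $b = 2$).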

where $y_{avg}$ is the average (mean) of the given $y_i$ values. The improvement due to curve fitting is given by the expression

$$r^2 = \frac{S_m - S}{S_m}$$
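A minimal sketch of this computation, assuming $a$ and $b$ have already been obtained from the normal equations. For the small illustrative data set $(0, 1), (1, 3), (2, 4)$ the least-squares coefficients are $a = 7/6$ and $b = 1.5$; they are typed in by hand so the block runs on its own. The function name `r_squared` is hypothetical.

```python
# Hypothetical helper: r^2 = (Sm - S) / Sm for the line f(x) = a + b*x.
#   Sm: spread of y about its mean (before regression)
#   S:  sum of squared residuals about the fitted line
def r_squared(x, y, a, b):
    n = len(y)
    y_avg = sum(y) / n
    s_m = sum((yi - y_avg) ** 2 for yi in y)
    s = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))
    if s_m == 0:
        raise ValueError("all y values are equal; r^2 is undefined")
    return (s_m - s) / s_m


x = [0, 1, 2]
y = [1, 3, 4]
print(r_squared(x, y, a=7 / 6, b=1.5))  # ≈ 0.964 (exactly 27/28)
```

Here $S_m = 42/9$ and $S = 1/6$, so the fitted line removes about 96% of the original spread.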

where r is the correlation coefficient.

Note that $r$ has a maximum value of 1.0; the closer its value is to 1, the better the correlation.
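Why $r$ cannot exceed 1 follows from the definitions. $S$ is the smallest sum of squares over all choices of $a$ and $b$, so it can be no larger than the sum obtained with the particular choice $a = y_{avg}$, $b = 0$, which is exactly $S_m$:

$$0 \le S \le \sum_{i=1}^{n} (y_i - y_{avg} - 0 \cdot x_i)^2 = S_m \quad\Longrightarrow\quad 0 \le r^2 = \frac{S_m - S}{S_m} \le 1$$

The value $r^2 = 1$ is reached only when $S = 0$, i.e. when every data point lies on the fitted line.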