Image Capture Formats: Video cameras come in two different image capture formats: progressive scan and interlaced scan.

Interlaced Scan

Interlaced scan is a technique for improving the picture quality of a video transmission without consuming extra bandwidth. It was invented by RCA engineer Randall Ballard in the late 1920s.

Interlaced scan was used universally in television until the 1970s, when the needs of computer monitors led to the reintroduction of progressive scan. Although interlace can improve the resolution of still images, it also causes flicker and various other kinds of distortion. Interlace is still used by all standard-definition TVs and by the 1080i HDTV broadcast standard, but not by LCD, micro-mirror (DLP), or plasma displays.

These displays require some form of deinterlacing, which can add to the cost of the set.

With progressive scan, an image is captured, transmitted, and displayed in a way similar to text on a page: line by line, from top to bottom.

The interlaced scan pattern on a cathode ray tube (CRT) display also completes such a scan, but only for every second line. The next set of video scan lines is then drawn in the gaps between the lines of the previous scan.

Each such scan of every second line is called a field.
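To make the idea of a field concrete, here is a minimal Python sketch that models a frame as a list of scan lines, splits it into an odd field and an even field, and lists the order in which lines would be drawn under each scan method. The function names `split_fields` and `scan_order` are hypothetical, chosen for illustration. The sketch assumes the odd field is drawn first; real standards differ on which field comes first.

```python
def split_fields(frame):
    """Split a frame (scan lines listed top to bottom) into its two fields.

    Lines are numbered from 1, so the odd field holds lines 1, 3, 5, ...
    and the even field holds lines 2, 4, 6, ...
    """
    odd_field = frame[0::2]   # list indices 0, 2, 4, ... = lines 1, 3, 5, ...
    even_field = frame[1::2]  # list indices 1, 3, 5, ... = lines 2, 4, 6, ...
    return odd_field, even_field


def scan_order(num_lines, interlaced):
    """Return the 1-based line numbers in the order they are drawn for one frame."""
    lines = list(range(1, num_lines + 1))
    if not interlaced:
        return lines  # progressive: every line, top to bottom
    # Interlaced (assumed odd field first): every second line, then the gaps.
    return lines[0::2] + lines[1::2]


if __name__ == "__main__":
    frame = [f"line {n}" for n in range(1, 7)]
    odd, even = split_fields(frame)
    print(odd)   # ['line 1', 'line 3', 'line 5']
    print(even)  # ['line 2', 'line 4', 'line 6']
    print(scan_order(6, interlaced=False))  # [1, 2, 3, 4, 5, 6]
    print(scan_order(6, interlaced=True))   # [1, 3, 5, 2, 4, 6]
```

Slicing with a step of 2 keeps the sketch short. Each field holds half the lines of the frame, and the two fields together cover every line exactly once.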

The afterglow of the CRT phosphor, combined with persistence of vision, causes the two fields to be perceived as one continuous image. This allows full horizontal detail to be viewed with half the bandwidth that a full progressive scan would require, while keeping the CRT refresh rate high enough to prevent flicker.
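The bandwidth saving can be checked with simple arithmetic. The Python sketch below counts how many scan lines must be sent per second at a given refresh rate. It assumes 480 active lines and a round 60 passes per second (close to NTSC's field rate), ignores blanking intervals, and assumes an even line count. The function name `lines_per_second` is hypothetical.

```python
def lines_per_second(active_lines, refresh_hz, interlaced):
    """Scan lines transmitted per second, ignoring blanking intervals.

    Assumes an even number of active lines, so each interlaced field
    carries exactly half of them.
    """
    # Progressive: every pass redraws all lines.
    # Interlaced: every pass (field) draws only every second line.
    lines_per_pass = active_lines // 2 if interlaced else active_lines
    return lines_per_pass * refresh_hz


if __name__ == "__main__":
    progressive = lines_per_second(480, 60, interlaced=False)
    interlaced = lines_per_second(480, 60, interlaced=True)
    print(progressive)               # 28800
    print(interlaced)                # 14400
    print(interlaced / progressive)  # 0.5
```

At the same refresh rate, the interlaced signal carries half as many lines per second. If each line holds the same horizontal detail, it therefore needs roughly half the bandwidth.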

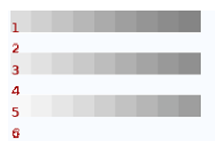

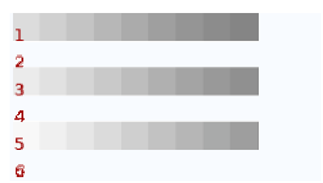

Odd field

Even field

Because afterglow and persistence of vision play a significant part in interlaced scan, only CRTs can display interlaced video directly. Other display technologies require some form of deinterlacing.
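The text does not say which deinterlacing method a given display uses. As an illustration only, the Python sketch below shows two common textbook approaches, usually called "weave" and "bob". Weave interleaves the two fields back into one frame. Bob line-doubles a single field to full height. The function names are hypothetical, and fields are modeled as lists of scan lines with the odd field holding line 1.

```python
def weave(odd_field, even_field):
    """Interleave two fields into one full frame (odd field supplies line 1)."""
    frame = []
    for odd_line, even_line in zip(odd_field, even_field):
        frame.extend([odd_line, even_line])
    # With an odd total line count, the odd field has one extra line; keep it.
    if len(odd_field) > len(even_field):
        frame.append(odd_field[-1])
    return frame


def bob(field):
    """Line-double a single field into a full-height frame."""
    frame = []
    for line in field:
        frame.extend([line, line])  # repeat each line to fill the gap below it
    return frame


if __name__ == "__main__":
    print(weave(["1", "3", "5"], ["2", "4", "6"]))  # ['1', '2', '3', '4', '5', '6']
    print(bob(["1", "3", "5"]))  # ['1', '1', '3', '3', '5', '5']
```

The two methods trade off differently. Weave restores full vertical resolution for still images, but it produces "combing" on moving objects, because the two fields were captured at different moments. Bob avoids combing but halves vertical resolution. Practical deinterlacers often blend or adapt between such approaches.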

In the 1970s, computers and home video game systems began using TV sets as display devices. At that time, a 480-line NTSC signal was well beyond the graphics capabilities of low-cost computers. These systems therefore used a simplified video signal in which each video field was scanned directly on top of the previous one, rather than with each line falling between two lines of the previous field.
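The difference between the simplified signal and true interlace comes down to where each field's lines land vertically. The Python sketch below models this, measuring positions in units of interlaced-frame line spacing, with 0 at the top of the picture. Both the unit choice and the name `field_line_positions` are assumptions made for illustration. With interlace, the second field is offset by one line so it fills the gaps. With the simplified signal, every field lands on the same positions.

```python
def field_line_positions(field_number, lines_per_field, interlaced):
    """Vertical positions of a field's scan lines.

    Positions are in units of interlaced-frame line spacing (0 = top).
    Fields are numbered 0, 1, 2, ...; in interlaced mode, odd-numbered
    fields are shifted down by one unit into the gaps of the previous field.
    """
    offset = field_number % 2 if interlaced else 0
    return [2 * n + offset for n in range(lines_per_field)]


if __name__ == "__main__":
    print(field_line_positions(0, 3, interlaced=True))   # [0, 2, 4]
    print(field_line_positions(1, 3, interlaced=True))   # [1, 3, 5]
    print(field_line_positions(1, 3, interlaced=False))  # [0, 2, 4]
```

In the non-interlaced case, every field repeats the same positions. The picture therefore has only half the vertical line count, but a fresh, complete image is drawn on every field.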

By the 1980s, computers had outgrown these video systems and needed better displays. Solutions from different companies varied widely. Because PC monitor signals did not need to be broadcast, they could use far more than the 6, 7, or 8 MHz of bandwidth to which NTSC and PAL signals were confined.

In the early 1990s, monitor and graphics card manufacturers introduced newer high-resolution standards that once again included interlace. These monitors ran at very high refresh rates, which were intended to alleviate flicker problems. Such monitors proved very unpopular. Although flicker was not obvious on them at first, eyestrain and lack of focus nevertheless became a serious problem. The industry quickly abandoned the practice, and for the rest of the decade every monitor came with an assurance that its stated resolutions were "non-interlaced".