Reference no: EM131096878

10-701 Machine Learning - Spring 2012 - Problem Set 5

Q1. Hidden Markov Model

Hidden Markov Model is an instance of the state space model in which the latent variables are discrete. Let K be the number of hidden states. We use the following notations: x are the observed variables, z are the hidden state variables (we use 1-of-K representation: zk = 1, zj≠k = 0 means the hidden state is k). The transition probabilities are given by a K × K matrix A, where Ajk = p(zn,k = 1|zn-1,j = 1) and the initial state variable z1 are given by a vector of probabilities π: p(z1|π) = k=1∏K πz_1kk. Finally, the emission distribution for a hidden state k is parametrized by φk: p(xn|φk). Let Θ = {A, π, φ}.

1.1 The full likelihood of a data set

If we have a data set X = {x1, . . . , xN}:

1. What is the full likelihood of observed and latent variables: p(X, Z|Θ)? Note Z = {z1, . . . , zN} are the hidden states of the corresponding observations.

2. What is the likelihood of the data set? (e.g. p(X|Θ).

1.2 Expectation-Maximization (EM) for Maximum Likelihood Learning-

We'd like to derive formulas for estimating A and φ to maximize the likelihood of the data set p(X|Θ).

1. Assume we can compute p(X, Z|Θ) in O(1) time complexity, what is the time complexity of computing p(X|Θ)?

We use EM algorithm for this task:

-In the E step, we take the current parameter values and compute the posterior distribution of the latent variables p(Z|X, Θold).

-In the M step, we find the new parameter values by solving an optimization problem:

Θnew = argmaxΘQ(Θ, Θold) (1)

where

Q(Θ, Θold) = ∑Zp(Z|X, Θold) ln p(X, Z|Θ) (2)

2. Show that

Q(Θ, Θold) =k=1∑Kγ(z1k) ln πk + n=2∑Nj=1∑Kk=1∑Kξ(zn-1,j, znk) ln Ajk (3)

+ n=1∑Nk=1∑Kγ(znk) ln p(xn|φk) (4)

where

γ(znk) = Ep(zn|X,Θold)[znk] (5)

ξ(zn-1,j, znk) = Ep(zn-1,zn|X,Θold)[zn-1,j znk] (6)

Show your derivations.

3. Show that

p(X|zn-1, zn) = p(x1, . . . , xn-1|zn-1)p(xn|zn)p(xn+1, . . . xN |zn) (7)

4. In class, we discuss how to compute:

α(zn) = p(x1, . . . , xn, zn) (8)

β(zn) = p(xn+1, . . . , xN |zn) (9)

Show that

ξ(zn-1, zn) = p(zn-1, zn|X) (10)

= α(zn-1)p(xn|zn)p(zn|zn-1)β(zn)/p(X) (11)

How would you compute p(X)?

5. Show how to compute γ(znk) and ξ(zn-1,j , znk) using α(zn), β(zn) and ξ(zn-1, zn).

6. Show that if any elements of the parameters π or A for a hidden Markov model are initially set to 0, then those elements will remain zero in all subsequent updates of the EM algorithm.

1.3 A coin game-

Two students X and Y from Cranberry Lemon University play a stochastic game with a fair coin. X and Y take turn with X going first. All the coin flips are recorded and the game finishes when a sequence of THT first appears. The player who last flips the coin is the winner. Two players can flip the coin many times as follows. At his turn, each time X flips the original coin, he also flips an extra biased coin (p(H) = 0.3.) He stops only if the extra coin lands head, otherwise he repeats flipping the original and extra coins, .... (The flips of this extra coin are not recorded.) On the other hand, at his turn, Y flips the coin until T appears (All of his flips are recorded).

You are given a sequence of recorded coin flips, you would like to infer the winner and as well as the flips of each player.

1. Describe a HMM to model this game.

2. How would you use this HMM model to infer the (most probable) winner and the (most probable) flips of each player?

Q2. Dimensionality Reduction

2.1 Singular value decomposition

In linear algebra, the singular value decomposition (SVD) is a factorization of a real matrix X as:

X = USVT (12)

If the dimension of X is m × n, where without loss of generality m ≥ n, U is an m × n matrix, S is an n × n diagonal matrix and VT is also an n × n matrix. Furthermore, U and V are orthonormal matrices: UUT = I and VVT = I.

2.2 PCA and SVD-

Consider a dataset of observations {xn} where n = 1, . . . , N. We assume that the examples are zero-centered such that x¯ = n=1∑N xn = 0. PCA algorithm computes the covariance matrix:

S = 1/N n=1∑NxnxTn (13)

The principal components {ui} are eigenvectors of S.

Let X = [x1, . . . , xN], a D × N matrix where each column is one example xn. If US'VT is a SVD of X,

1. Show that the principal components {ui} are columns of U. This shows the relationship between PCA and SVD.

2. When the number of dimensions is much larger than the number of data points (D >> N), is it better to do PCA by using the covariance matrix or using SVD?

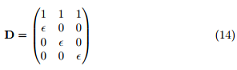

3. Consider the following data set:

where ∈ is a tiny number. Each column is one example. First zero-center the data set and then do PCA using two techniques: 1) by using the covariance matrix and 2) by using SVD. What do you observe? Hints: What is the "dimension" of this dataset? You can use Matlab, try ∈ = 1e - 10, which techniques return sensible result.

Q3. Markov Decision Process

1. A standard MDP is described by a set of states S, a set of actions A, a transition function T, and a reward function R. Where T(s, a, s') gives the probability of transitioning to s' after taking action a in state s, and R(s) gives the immediate reward of being in state s. A k-order MDP is described in the same way with one exception. The transition function T depends on the current state s and also the previous k-1 states. That is, T(sk-1, . . . s1, s, a, s') = p(s', a, s, s1, . . . sk-1) gives the probability of transitioning to state s' given that action a was taken in state s and the previous k - 1 states were (sk-1, . . . , s1).

Given a k-order MDP M = (S; A; T; R) describe how to construct a standard (first-order) MDP M' = (S', A', T', R') that is equivalent to M. Here equivalent means that a solution to M' can be easily converted into a solution to M. Be sure to describe S', A', T', and R'. Give a brief justification your construction.

2. The Q-learning update rule for deterministic MDPs is as follows:

Q(s, a) ← R(s, a) + γ maxa'Q(s', a') (15)

where s' = f(s, a) is the action to be taken. Prove that Q-learning converges in deterministic MDPs.