
IS71083A Financial Data Modelling Assignment: Implementing an Incremental (Online) Training Algorithm for Density Neural Networks

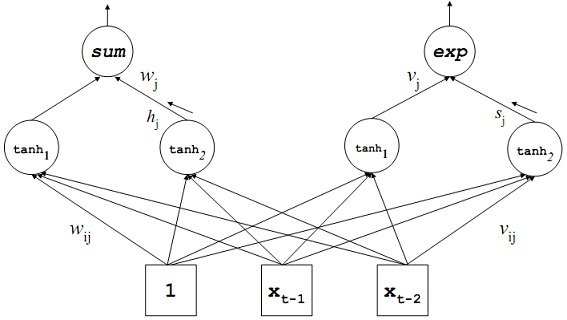

Design and implement an incremental backpropagation algorithm for training density neural networks (DNNs). A DNN computes not only the mean of the target distribution but also its variance, which is produced by a second output node. The backpropagation algorithm must therefore train all the weights leading to each of the two output nodes of the DNN shown in the figure. Start training from plausible, randomly generated initial weights. Once the training algorithm is designed, apply it to model and forecast a time series generated with the Mackey-Glass equation (available in the lecture handouts). Use more lagged inputs and hidden units if necessary to achieve better predictions.
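The Mackey-Glass data and the lagged input patterns can be prepared along the lines of the Python sketch below (Python rather than the MATLAB the brief asks for, and not a reference solution). The exact equation and parameter values are in the lecture handouts, which are not reproduced here, so this sketch assumes the commonly used delay-differential form dx/dt = beta * x(t - tau) / (1 + x(t - tau)^10) - gamma * x(t) with beta = 0.2, gamma = 0.1 and tau = 17, simple Euler integration with step 0.1, a constant initial history of 1.2, and one sample per unit of time. The handouts may use a different integrator or parameters. The function names `mackey_glass` and `make_lagged` are hypothetical.

```python
def mackey_glass(n_samples, tau=17.0, beta=0.2, gamma=0.1, power=10,
                 dt=0.1, sample_every=10, x0=1.2):
    """Euler integration of
    dx/dt = beta * x(t - tau) / (1 + x(t - tau)**power) - gamma * x(t).

    All parameter defaults are assumed values, not taken from the handouts.
    Returns n_samples values, one every `sample_every` Euler steps
    (10 steps of dt = 0.1 give one sample per unit of time).
    """
    delay = int(round(tau / dt))      # the delay tau expressed in Euler steps
    x = [x0] * (delay + 1)            # assumed constant history x(t) = x0 for t <= 0
    for _ in range(n_samples * sample_every):
        x_now, x_lag = x[-1], x[-1 - delay]   # x(t) and x(t - tau)
        dx = beta * x_lag / (1.0 + x_lag ** power) - gamma * x_now
        x.append(x_now + dt * dx)
    trajectory = x[delay + 1:]        # drop the artificial initial history
    return trajectory[sample_every - 1::sample_every]


def make_lagged(series, n_lags):
    """Build one-step-ahead training patterns:
    inputs[k] = series[k:k + n_lags], targets[k] = series[k + n_lags]."""
    inputs, targets = [], []
    for k in range(len(series) - n_lags):
        inputs.append(series[k:k + n_lags])
        targets.append(series[k + n_lags])
    return inputs, targets
```

For example, `make_lagged(mackey_glass(1000), 6)` yields 994 input vectors of six lagged values, each paired with the next value of the series as its target. Raising `n_lags` is the "more lagged inputs" option mentioned in the task.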

Develop a working prototype of incremental backpropagation for density networks in MATLAB. The prototype should include data structures for the input-to-hidden and hidden-to-output connections, and loops for the forward and backward passes.

References

1. D. A. Nix and A. S. Weigend (1994). "Estimating the mean and variance of the target probability distribution". In: Proc. 1994 IEEE Int. Conf. on Neural Networks (ICNN'94), Orlando, FL, pp. 55-60.

2. N. Nikolaev and H. Iba (2006). *Adaptive Learning of Polynomial Networks: Genetic Programming, Backpropagation and Bayesian Methods*. Springer, New York, pp. 256-262.