
Turing Machines

Models of Computation

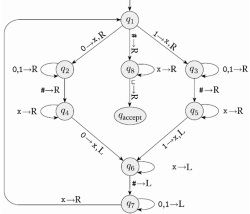

Question 1. Consider the state diagram in Figure 1 for the Turing machine M_A that decides the language A = {w#w | w ∈ {0, 1}∗}, which we discussed in class. For the input 01#01, give the sequence of configurations that M_A enters (you may follow the style we used in class).

Figure 1: The state diagram of the Turing machine M_A for Question 1.
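As an illustration of the configuration notation only, the following minimal Python sketch prints the configurations of a machine for A. It assumes Figure 1 is the standard crossing-off machine for {w#w} with states q1 to q8 plus qaccept and qreject; the state names, the transition table, and the helpers `configurations` and `fmt` are hypothetical, so check every transition against Figure 1 before trusting the output. The current state is shown in brackets, and `_` stands for a blank.

```python
BLANK = "_"

# Hypothetical transition table, ASSUMING Figure 1 is the standard machine for {w#w}:
# (state, symbol) -> (next state, symbol to write, head move).
# Any (state, symbol) pair not listed sends the machine to qreject.
DELTA = {
    ("q1", "0"): ("q2", "x", "R"),
    ("q1", "1"): ("q3", "x", "R"),
    ("q1", "#"): ("q8", "#", "R"),
    ("q2", "0"): ("q2", "0", "R"),
    ("q2", "1"): ("q2", "1", "R"),
    ("q2", "#"): ("q4", "#", "R"),
    ("q3", "0"): ("q3", "0", "R"),
    ("q3", "1"): ("q3", "1", "R"),
    ("q3", "#"): ("q5", "#", "R"),
    ("q4", "x"): ("q4", "x", "R"),
    ("q4", "0"): ("q6", "x", "L"),
    ("q5", "x"): ("q5", "x", "R"),
    ("q5", "1"): ("q6", "x", "L"),
    ("q6", "0"): ("q6", "0", "L"),
    ("q6", "1"): ("q6", "1", "L"),
    ("q6", "x"): ("q6", "x", "L"),
    ("q6", "#"): ("q7", "#", "L"),
    ("q7", "0"): ("q7", "0", "L"),
    ("q7", "1"): ("q7", "1", "L"),
    ("q7", "x"): ("q1", "x", "R"),
    ("q8", "x"): ("q8", "x", "R"),
    ("q8", BLANK): ("qaccept", BLANK, "R"),
}


def fmt(tape, state, head):
    # Configuration "u q v": tape left of the head, the state, then the head cell onward
    return "".join(tape[:head]) + f"[{state}]" + "".join(tape[head:])


def configurations(w, delta=DELTA, start="q1", max_steps=10_000):
    tape = list(w) or [BLANK]
    state, head = start, 0
    configs = []
    for _ in range(max_steps):
        configs.append(fmt(tape, state, head))
        if state in ("qaccept", "qreject"):
            return configs
        if (state, tape[head]) not in delta:
            state = "qreject"  # missing transition: the machine rejects
            continue
        state, write, move = delta[(state, tape[head])]
        tape[head] = write
        if move == "R":
            head += 1
            if head == len(tape):
                tape.append(BLANK)  # cells never visited before are blank
        else:
            head = max(0, head - 1)  # at the left end the head stays put
    raise RuntimeError("step limit exceeded")


for config in configurations("01#01"):
    print(config)
```

Under these assumed transitions the trace has 19 configurations, starting at `[q1]01#01` and ending at `xx#xx_[qaccept]_`.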

Question 2. Consider the language B = {0^n#1^n | n ≥ 0}. Give the state diagram of a Turing machine that decides B using the following idea: zigzag across the tape to corresponding positions on either side of #, crossing off a 0 on the left side and a 1 on the right side (the idea is similar to that of the Turing machine for the language A in Question 1, which we discussed in class).

Hint: The simplest approach is to modify the state diagram in Figure 1.
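The zigzag idea can be traced at the tape level with the minimal Python sketch below. It is not the requested state diagram: it only shows which cells get crossed off and when the machine should reject. It assumes crossed-off cells are marked `x` (as in the machine for A), and the function name `zigzag_decide` is hypothetical.

```python
def zigzag_decide(w: str) -> bool:
    tape = list(w)
    if "#" not in tape:
        return False  # every string in B contains a #
    sep = tape.index("#")  # the first #; any later # makes the right side fail below
    while True:
        # Start of a zig: leftmost uncrossed cell on the left of '#'
        i = next((k for k in range(sep) if tape[k] != "x"), sep)
        if i == sep:
            # Left side exhausted: accept iff the right side is fully crossed off
            return all(c == "x" for c in tape[sep + 1:])
        if tape[i] != "0":
            return False
        tape[i] = "x"
        # Move past '#' to the first uncrossed cell on the right side
        j = next((k for k in range(sep + 1, len(tape)) if tape[k] != "x"), None)
        if j is None or tape[j] != "1":
            return False
        tape[j] = "x"
        # Zag: the loop restarts its scan from the left end of the tape
```

For example, `zigzag_decide("000#111")` crosses off three 0/1 pairs and accepts, while `zigzag_decide("00#1")` runs out of 1's on the second zig and rejects.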

Question 3. Give implementation-level descriptions of Turing machines that decide the following languages. Recall from class that an implementation-level description explains how the machine moves its head and changes the data on its tape; you do not need to specify states or the transition function (and no state diagram is needed).

Assignment 4

(a) {w | w ∈ {a, b}∗ and w contains twice as many a's as b's}. You may assume that the empty string ε is in the language, because the number of a's in ε (zero) equals twice the number of b's in ε (also zero).

(b) {a^i b^j c^k | 0 ≤ i < j < k}.
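One possible crossing-off strategy for each language is sketched below in Python, with the tape as a list and `x` marking a crossed-off cell. This is an illustration under assumptions, not the only valid description: the function names are hypothetical, `list.index` stands in for a left-to-right scan, and in (b) a regular expression stands in for the single scan that checks the shape a∗b∗c∗. In (b), b's matched against a's are relabeled `B` (a hypothetical marker) so they can still be matched against c's.

```python
import re


def decide_twice_as_many_a(w: str) -> bool:
    """(a) Each round crosses off one b and two a's."""
    tape = list(w)
    if any(sym not in "ab" for sym in tape):
        return False
    while True:
        if "b" not in tape:
            # No b left: accept iff every a has been crossed off as well
            return "a" not in tape
        tape[tape.index("b")] = "x"  # cross off the leftmost remaining b
        for _ in range(2):           # ...and two a's to match it
            if "a" not in tape:
                return False
            tape[tape.index("a")] = "x"


def decide_i_lt_j_lt_k(w: str) -> bool:
    """(b) Accept a^i b^j c^k with 0 <= i < j < k."""
    # Pass 1: check the shape a*b*c* (regex used as a stand-in for one scan)
    if re.fullmatch(r"a*b*c*", w) is None:
        return False
    tape = list(w)
    # Pass 2: match each a with a b; matched b's become 'B' so pass 3 still counts them
    while "a" in tape:
        tape[tape.index("a")] = "x"
        if "b" not in tape:
            return False  # b's ran out: j < i
        tape[tape.index("b")] = "B"
    if "b" not in tape:
        return False  # no unmatched b left: j == i
    # Pass 3: match each b (marked or not) with a c
    for pos, sym in enumerate(tape):
        if sym in "bB":
            tape[pos] = "x"
            if "c" not in tape:
                return False  # c's ran out: k < j
            tape[tape.index("c")] = "x"
    return "c" in tape  # accept iff a c is left over: k > j
```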

Question 4. Let a k-PDA be a pushdown automaton that has k stacks. Thus a 0-PDA is an NFA and a 1-PDA is a conventional PDA. You already know that 1-PDAs are more powerful (i.e., recognize a larger class of languages) than 0-PDAs. Answer the following questions.

(a) Show that we can use a 2-PDA to simulate a Turing machine (so that the 2-PDA recognizes the same language as the Turing machine).

Note: Since we already know that Turing machines are more powerful than 1-PDAs, the above essentially proves that 2-PDAs are more powerful than 1-PDAs.

(b) Show that 3-PDAs are NOT more powerful than 2-PDAs. (Hint: show that we can use a multi-tape Turing machine to simulate a 3-PDA.)
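For part (a), one common idea is to hold the Turing machine's tape in two stacks: one for the cells left of the head and one for the head cell and everything to its right. The Python class below is a minimal sketch of that bookkeeping under this assumption; the class and method names are hypothetical. A 2-PDA would first push the input onto one stack and then pop it onto the other so the first input symbol ends up on top; `reversed` plays that role here.

```python
BLANK = "_"


class TwoStackTape:
    """A TM tape stored in two stacks (hypothetical helper).

    left:  cells left of the head; the top (end of list) is the cell just left of the head.
    right: the head cell on top, then the cells further to the right.
    """

    def __init__(self, w: str):
        self.left = []
        # Input loaded in reverse so its first symbol sits on top, under the head
        self.right = list(reversed(w)) or [BLANK]

    def read(self) -> str:
        return self.right[-1]

    def write(self, symbol: str) -> None:
        self.right[-1] = symbol  # i.e. pop the head symbol and push the new one

    def move_right(self) -> None:
        self.left.append(self.right.pop())
        if not self.right:  # moved past the input: a fresh cell is blank
            self.right.append(BLANK)

    def move_left(self) -> None:
        if self.left:  # at the left end the head stays put, as on an ordinary TM
            self.right.append(self.left.pop())

    def contents(self) -> str:
        return "".join(self.left) + "".join(reversed(self.right))
```

Each Turing machine step then becomes a bounded number of pops and pushes, which a 2-PDA can perform in its finite control.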

Question 5. A Turing machine with doubly infinite tape is similar to an ordinary Turing machine, but its tape is infinite to the left as well as to the right. The tape is initially filled with blanks except for the portion that contains the input. Computation is defined as usual except that the head never encounters an end to the tape as it moves leftward. Show that this type of Turing machine recognizes the class of Turing-recognizable languages (i.e., this type of Turing machine has the same power as the ordinary Turing machines). (Hint: Show that we can use an ordinary Turing machine with two tapes to simulate a Turing machine with doubly infinite tape.)
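Following the hint, one way to organize the simulation is to keep cells 0, 1, 2, ... (where the input starts) on the first tape and cells -1, -2, ... on the second tape in reverse order. The Python class below is a minimal sketch of that index mapping under this assumption; the class name and the cell numbering are hypothetical, and a real two-tape machine would track which tape the simulated head is on in its states rather than with a signed integer.

```python
BLANK = "_"


class DoublyInfiniteTape:
    """Cells 0, 1, 2, ... live on tape 1; cell -p lives on tape 2 at index p - 1.
    Both backing lists behave like ordinary one-way-infinite tapes (hypothetical helper)."""

    def __init__(self, w: str):
        self.pos = list(w) or [BLANK]  # tape 1: input starts at cell 0
        self.neg = []                  # tape 2: initially all blank
        self.head = 0

    def _cell(self):
        # Map the head position to (backing list, index), growing the list with
        # blanks the first time the head reaches a new cell
        if self.head >= 0:
            tape, i = self.pos, self.head
        else:
            tape, i = self.neg, -self.head - 1
        while len(tape) <= i:
            tape.append(BLANK)
        return tape, i

    def read(self) -> str:
        tape, i = self._cell()
        return tape[i]

    def write(self, symbol: str) -> None:
        tape, i = self._cell()
        tape[i] = symbol

    def move(self, direction: str) -> None:
        # No left end: moving left from cell 0 simply enters cell -1 on tape 2
        self.head += 1 if direction == "R" else -1
```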

Question 6. Show that the class of decidable languages is closed under the operation of

(a) intersection.

(b) star.

(c) complementation.
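The three constructions can be illustrated by modeling a decider as a Python predicate that always returns. This is a minimal sketch under that modeling assumption, and the names `intersection`, `complement`, and `star` are hypothetical. For star, the sketch uses a table over prefixes as one way to "try every split of w into pieces"; since there are finitely many splits and every call halts, the combined machine halts too.

```python
from typing import Callable

Decider = Callable[[str], bool]  # stands in for a TM that halts on every input


def intersection(d1: Decider, d2: Decider) -> Decider:
    # Run d1, then d2, on the same input; both halt, so this halts too
    return lambda w: d1(w) and d2(w)


def complement(d: Decider) -> Decider:
    # Swap accept and reject; this is only valid because d always halts
    return lambda w: not d(w)


def star(d: Decider) -> Decider:
    def decide(w: str) -> bool:
        # ok[i] is True when w[:i] splits into nonempty pieces that d accepts;
        # ok[0] is True because the empty string is always in L*
        ok = [True] + [False] * len(w)
        for i in range(1, len(w) + 1):
            ok[i] = any(ok[j] and d(w[j:i]) for j in range(i))
        return ok[len(w)]
    return decide
```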

Question 7. Show that the class of Turing-recognizable languages is closed under the operation of

(a) intersection.

(b) star.
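Unlike a decider, a recognizer may loop, so the constructions must never wait on a single computation that might run forever. The Python sketch below assumes a hypothetical model in which `m(w, t)` reports whether the machine accepts w within t steps, and dovetails by raising a shared step budget t = 1, 2, 3, .... All names are hypothetical. For star, every split of w is retried under each budget, so one piece on which the machine loops cannot stall the search.

```python
from itertools import count
from typing import Callable, Iterator, List

# Hypothetical model of a recognizer: m(w, t) is True iff the machine accepts w
# within t steps. If w is not in the language, m(w, t) is False for every t
# (the real machine rejects or loops forever).
Bounded = Callable[[str, int], bool]


def splits(w: str) -> Iterator[List[str]]:
    """Every way to cut w into nonempty consecutive pieces (finitely many)."""
    if not w:
        yield []
        return
    for i in range(1, len(w) + 1):
        for rest in splits(w[i:]):
            yield [w[:i]] + rest


def intersection(m1: Bounded, m2: Bounded) -> Bounded:
    # Accept under budget t once both machines have accepted within t steps
    return lambda w, t: m1(w, t) and m2(w, t)


def star(m: Bounded) -> Bounded:
    # Under budget t, try every split with at most t steps per piece (dovetailing)
    return lambda w, t: any(all(m(p, t) for p in parts) for parts in splits(w))


def recognize(m: Bounded, w: str) -> bool:
    # Raise the budget forever: returns True iff m accepts w, otherwise never halts
    for t in count(1):
        if m(w, t):
            return True
    raise AssertionError("unreachable")  # count() never ends
```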

Question 8. Give a high-level description of a nondeterministic Turing machine that decides the following language:

{w_1 w_1 w_2 w_2 | w_1, w_2 ∈ {0, 1}∗}.

For example, 101000 is in the language because we can take w_1 = 10 and w_2 = 0, while 1001 is not in the language.

Recall from class that a high-level description uses English to describe the algorithm; you do not need to describe how the Turing machine manages its tape or head.
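A natural nondeterministic strategy is to guess where w_1 w_1 ends and w_2 w_2 begins, and then check each part deterministically. The minimal Python sketch below simulates this by trying every guess in turn; each loop iteration corresponds to one branch, and the function names are hypothetical. Because every branch halts, the machine decides the language rather than merely recognizing it.

```python
def is_square(s: str) -> bool:
    """True iff s = uu for some string u (the deterministic check inside one branch)."""
    half, rem = divmod(len(s), 2)
    return rem == 0 and s[:half] == s[half:]


def ntm_decides(w: str) -> bool:
    if not set(w) <= {"0", "1"}:
        return False
    # Each cut is one nondeterministic branch: guess where w1 w1 ends.
    # The NTM accepts iff some branch accepts; every branch halts.
    return any(is_square(w[:cut]) and is_square(w[cut:]) for cut in range(len(w) + 1))
```

For 101000, the branch with the cut after position 4 checks 1010 = (10)(10) and 00 = (0)(0) and accepts; for 1001, no cut gives two squares, so every branch rejects.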